S3 Access in Spark and Databricks

Overview

You can use a standard Amazon S3 client library (such as Boto3 in Python) and point it at Immuta to access your data. The integration with Databricks uses a file system (is3a) that retrieves your API key and communicates with Immuta as if it were talking directly to S3, allowing users to access S3 and Azure Blob data sources through Immuta's s3p endpoint.
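As a rough sketch of the Boto3 side, the code below builds an S3 client whose requests go to Immuta rather than Amazon S3. Several details are hypothetical assumptions, not confirmed Immuta behavior: that the s3p endpoint is served at <immuta-url>/s3p, and that the Immuta API key is supplied in place of both AWS credential fields. Check both against your deployment. Path-style addressing is used to match the path-style setting that the is3a cluster configuration enables.

```python
from typing import Any, Dict

# Hypothetical: the "/s3p" suffix and the use of the Immuta API key as both
# AWS credential fields are assumptions for illustration only.


def immuta_s3_client_kwargs(immuta_url: str, api_key: str) -> Dict[str, Any]:
    """Return keyword arguments for boto3.client("s3", ...) aimed at Immuta."""
    return {
        "endpoint_url": immuta_url.rstrip("/") + "/s3p",  # assumed s3p location
        "aws_access_key_id": api_key,  # assumed credential mapping
        "aws_secret_access_key": api_key,  # assumed credential mapping
    }


def make_immuta_s3_client(immuta_url: str, api_key: str):
    """Create a Boto3 S3 client that sends requests to Immuta instead of S3."""
    import boto3  # imported here so the helper above works without boto3
    from botocore.config import Config

    return boto3.client(
        "s3",
        # Path-style addressing puts the bucket name in the URL path
        # instead of in a subdomain of the endpoint host.
        config=Config(s3={"addressing_style": "path"}),
        **immuta_s3_client_kwargs(immuta_url, api_key),
    )
```

With such a client, ordinary calls like client.list_objects_v2(Bucket=...) are sent to Immuta; how Immuta maps bucket names to data sources is outside the scope of this sketch.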

Note

This mechanism never goes to S3 directly. To access S3 directly, you need to expose an S3-backed table or view in the Databricks Metastore as a source, or use native workspaces/scratch paths.

Accessing Object-Backed Data Sources in Spark or Databricks

Configure Your Cluster

To use the is3a filesystem, add the following snippet to your cluster configuration:

IMMUTA_SPARK_DATABRICKS_FS_IS3A_PATH_STYLE_ACCESS=true

This configuration is required for any is3a access on Databricks Runtime 7 and later.

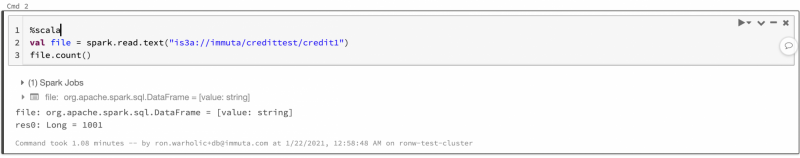
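If you create clusters programmatically (for example, through the Databricks Clusters API) rather than in the UI, a sketch like the following could merge the snippet into a cluster specification. It assumes the snippet is applied as a cluster environment variable, placed in the spec's spark_env_vars field; the helper names and the example cluster spec are hypothetical.

```python
from typing import Dict

IS3A_SNIPPET = "IMMUTA_SPARK_DATABRICKS_FS_IS3A_PATH_STYLE_ACCESS=true"


def parse_env_snippet(snippet: str) -> Dict[str, str]:
    """Parse KEY=value lines into a dict, skipping blank lines."""
    env: Dict[str, str] = {}
    for raw in snippet.splitlines():
        line = raw.strip()
        if not line:
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"expected KEY=value, got {line!r}")
        env[key.strip()] = value.strip()
    return env


def with_is3a_env(cluster_spec: dict) -> dict:
    """Return a copy of a cluster spec with the is3a setting added.

    Assumption: the setting belongs in the cluster's environment variables
    (spark_env_vars); existing variables are preserved.
    """
    spec = dict(cluster_spec)
    env = dict(spec.get("spark_env_vars", {}))
    env.update(parse_env_snippet(IS3A_SNIPPET))
    spec["spark_env_vars"] = env
    return spec
```

Copying the spec and its environment dict keeps the caller's original configuration unchanged.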

Query Your Data

- In Databricks or Spark, write queries that access this data by referencing the S3 path (shown in the Basic Information section of the Upload Files modal above), but using the is3a URL scheme (is3a://).
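The scheme swap can be sketched as a small helper. It assumes the path copied from the Basic Information section is an S3-style URL such as s3://bucket/key (s3a:// and s3n:// are accepted as well); the helper name and the bucket and file names used with it are hypothetical.

```python
from urllib.parse import urlsplit

# Schemes this sketch treats as S3-style; "is3a" is accepted so the
# helper is idempotent.
_S3_SCHEMES = ("s3", "s3a", "s3n", "is3a")


def to_is3a(path: str) -> str:
    """Rewrite an S3-style URL so that it uses the is3a scheme."""
    parts = urlsplit(path)
    if parts.scheme not in _S3_SCHEMES:
        raise ValueError(f"not an S3-style path: {path!r}")
    # Keep bucket (netloc), key (path), query and fragment unchanged.
    return parts._replace(scheme="is3a").geturl()
```

In a Databricks notebook, the rewritten path would then be passed to a reader, for example spark.read.parquet(to_is3a("s3://my-bucket/path/to/file.parquet")), assuming the underlying data is Parquet.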

Limitations

- This integration is only available for object-backed data sources. Consequently, all the standard limitations that apply to object-backed data sources in Immuta apply here.

- Additional configuration is necessary to allow is3a paths to function as scratch paths. Contact your Immuta support professional for guidance.