Configure Project UDFs Cache Settings

This page outlines the configuration for setting up project UDFs, which allow users to set their current project in Immuta through Spark. For details about the specific functions available and how to use them, see the Use Project UDFs (Databricks) page.

Use Project UDFs in Databricks

Outside of Databricks, not all caches are invalidated when a user changes projects, because Immuta also caches information about a user's current project in the NameNode plugin and in Vulcan. Consequently, this feature should only be used in Databricks.

Web Service and On-Cluster Caches

Immuta caches a mapping of user accounts and users' current projects in the Immuta Web Service and on-cluster. When users change their project with UDFs instead of the Immuta UI, Immuta invalidates all the caches on-cluster (so that everything changes immediately) and the cluster submits a request to change the project context to a web worker. Immediately after that request, another call is made to a web worker to refresh the current project.

To allow use of project UDFs in Spark jobs, raise the cache timeouts on-cluster and lower the cache timeouts for the Immuta Web Service. Otherwise, the request to change the project and the follow-up refresh call may be handled by different web workers, and a worker that still holds a cached value could return the user's previous project.
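The stale-read problem described above can be illustrated with a toy model. This is only a sketch, not Immuta's implementation: the `TTLCache` and `WebWorker` classes, the shared `store` dictionary, and the user and project names are all hypothetical stand-ins for a per-worker cache in front of a shared source of truth.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny time-to-live cache. With a TTL of 0, entries are never served."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        if self.clock() - stored_at >= self.ttl_seconds:
            del self._entries[key]  # entry has expired
            return None
        return value

    def put(self, key: str, value: str) -> None:
        self._entries[key] = (value, self.clock())


class WebWorker:
    """Hypothetical web worker with its own cache over a shared store."""

    def __init__(self, store: Dict[str, str], ttl_seconds: float,
                 clock: Callable[[], float]) -> None:
        self.store = store  # stand-in for the shared source of truth
        self.cache = TTLCache(ttl_seconds, clock)

    def get_current_project(self, user: str) -> str:
        cached = self.cache.get(user)
        if cached is not None:
            return cached
        project = self.store[user]
        self.cache.put(user, project)
        return project

    def set_current_project(self, user: str, project: str) -> None:
        self.store[user] = project
        self.cache.put(user, project)


def refresh_after_switch(web_ttl_seconds: float) -> str:
    """Change the project on one worker, then refresh through another."""
    now = [0.0]  # manual clock so the example is deterministic
    clock = lambda: now[0]
    store = {"alice": "project_a"}
    worker_1 = WebWorker(store, web_ttl_seconds, clock)
    worker_2 = WebWorker(store, web_ttl_seconds, clock)

    worker_2.get_current_project("alice")  # worker_2 may cache project_a
    now[0] += 1.0
    worker_1.set_current_project("alice", "project_b")
    return worker_2.get_current_project("alice")


print(refresh_after_switch(60))  # worker_2 still serves its cached value
print(refresh_after_switch(0))   # worker_2 reads the shared store
```

In this model, a nonzero web-side TTL lets the second worker answer the refresh call from its own cache, while a TTL of 0 forces every read back to the shared store. That is the reasoning behind lowering the Web Service cache timeout.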

Recommended Configuration

1 - Lower Web Service Cache Timeout

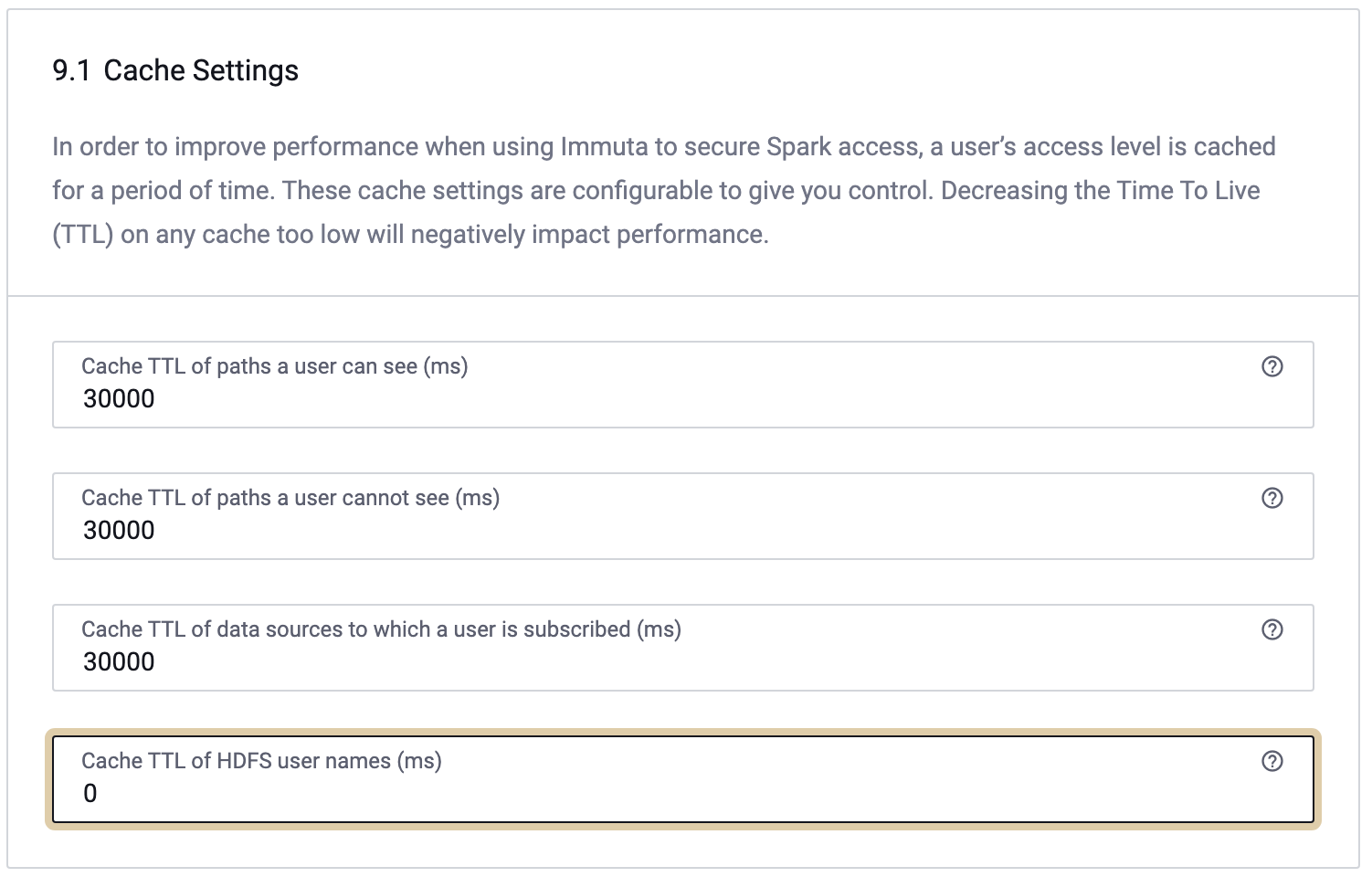

- Click the App Settings icon in the left sidebar and scroll to the HDFS Cache Settings section.
- Lower the Cache TTL of HDFS user names (ms) to 0.
- Click Save.

2 - Raise Cache Timeout On-Cluster

In the Spark environment variables section, set IMMUTA_CURRENT_PROJECT_CACHE_TIMEOUT_SECONDS and IMMUTA_PROJECT_CACHE_TIMEOUT_SECONDS to high values (such as 10000).

Note: These caches are invalidated on-cluster whenever a user calls immuta.set_current_project, so they can effectively be cached permanently on-cluster. This avoids periodic requests to the web service.
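As a sketch of what the two on-cluster settings above might look like in practice, the snippet below renders them both as `KEY=value` lines (the form accepted by the cluster's environment variables field) and as a `spark_env_vars` fragment of the kind used in a Databricks Clusters API cluster specification. The value 10000 is the example from this page, the helper name `as_env_lines` is hypothetical, and the rest of the cluster specification is omitted.

```python
import json
from typing import Dict

# Example "high value" taken from this page; adjust for your environment.
CACHE_TIMEOUT_SECONDS = 10000

spark_env_vars: Dict[str, str] = {
    "IMMUTA_CURRENT_PROJECT_CACHE_TIMEOUT_SECONDS": str(CACHE_TIMEOUT_SECONDS),
    "IMMUTA_PROJECT_CACHE_TIMEOUT_SECONDS": str(CACHE_TIMEOUT_SECONDS),
}


def as_env_lines(env: Dict[str, str]) -> str:
    """Render variables as KEY=value lines, sorted by name."""
    return "\n".join(f"{name}={value}" for name, value in sorted(env.items()))


# Lines to paste into the Spark environment variables section.
print(as_env_lines(spark_env_vars))

# Fragment of a cluster specification (all other fields omitted here).
print(json.dumps({"spark_env_vars": spark_env_vars}, indent=2))
```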

Blocking UDFs

If your compliance requirements restrict users from changing projects within a session, you can block the use of Immuta's project UDFs on a Databricks Spark cluster. To do so, configure the immuta.spark.databricks.disabled.udfs option as described on the Databricks environment variables page.