Databricks Metastore Magic

Databricks metastore magic allows you to migrate your data from the Databricks legacy Hive metastore to the Unity Catalog metastore while protecting data and maintaining your current processes in a single Immuta instance.

Databricks metastore magic is for customers who want to protect tables in the Hive metastore while using either of the following integrations:

- the Databricks Spark integration with Unity Catalog support

- the Databricks Unity Catalog integration

Requirement

Unity Catalog support is enabled in Immuta.

Databricks metastores and Immuta policy enforcement

Databricks has two built-in metastores that contain metadata about your tables, views, and storage credentials:

- Legacy Hive metastore: Created at the workspace level. This metastore contains metadata of the configured tables in that workspace available to query.

- Unity Catalog metastore: Created at the account level and attached to one or more Databricks workspaces. This metastore contains metadata of the configured tables available to query. All clusters in a workspace use the metastore attached to that workspace, and all workspaces attached to the same metastore share its tables.

Databricks allows you to use the legacy Hive metastore and the Unity Catalog metastore simultaneously. However, Unity Catalog does not support controls on the Hive metastore, so you must attach a Unity Catalog metastore to your workspace and move existing databases and tables to the attached Unity Catalog metastore to use the governance capabilities of Unity Catalog.
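To make the move between metastores concrete, here is a minimal sketch of one way a table could be copied. It assumes a Unity Catalog-enabled workspace, where Hive metastore tables are addressed through the `hive_metastore` catalog, and it uses a plain `CREATE TABLE ... AS SELECT` statement. The helper names, the `main` target catalog, and the `sales.orders` table are hypothetical, the target schema is assumed to exist already, and Databricks offers other upgrade paths that may suit your tables better.

```python
def quote(identifier: str) -> str:
    """Backtick-quote a SQL identifier, escaping embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def ctas_to_unity_catalog(schema: str, table: str, target_catalog: str) -> str:
    """Build a CREATE TABLE AS SELECT statement that copies a Hive metastore
    table into the same schema of a Unity Catalog catalog.

    Assumption: the source table is reachable as hive_metastore.<schema>.<table>.
    """
    source = ".".join(quote(part) for part in ("hive_metastore", schema, table))
    target = ".".join(quote(part) for part in (target_catalog, schema, table))
    return f"CREATE TABLE {target} AS SELECT * FROM {source}"


# Hypothetical names, for illustration only.
statement = ctas_to_unity_catalog("sales", "orders", target_catalog="main")
print(statement)
```

The function only builds the statement text. Running it (for example in a notebook or a SQL editor) is left to the administrator performing the migration.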

Immuta's Databricks Spark integration and Unity Catalog integration enforce access controls on the Hive and Unity Catalog metastores, respectively. However, these metastores have two distinct security models, and before metastore magic the two integrations were unaware of each other. Using both concurrently caused undefined behavior, so users were discouraged from using both in a single Immuta instance.

Databricks metastore magic solution

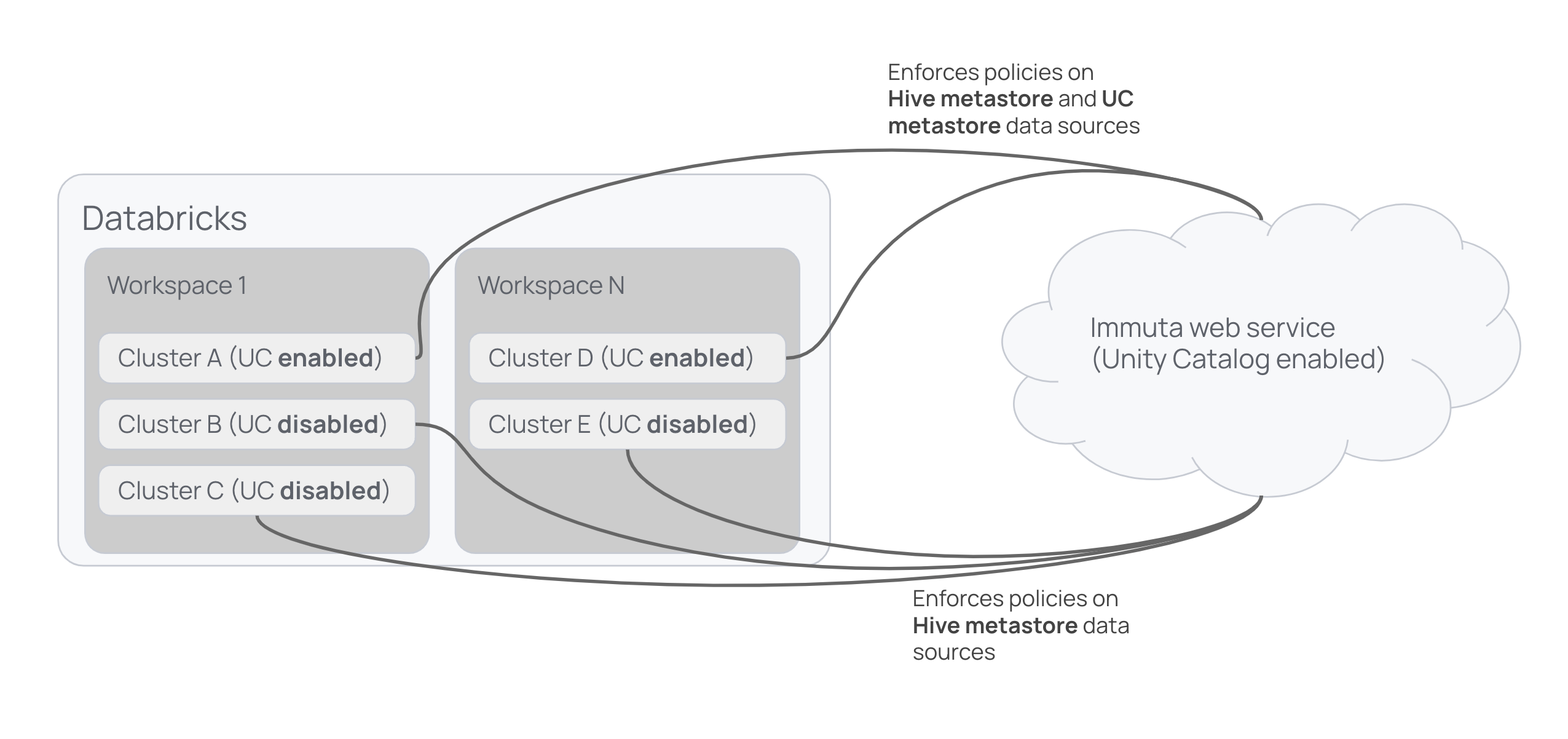

Metastore magic reconciles the distinct security models of the legacy Hive metastore and the Unity Catalog metastore, allowing you to use multiple metastores (specifically, the Hive metastore or AWS Glue Data Catalog alongside Unity Catalog metastores) within a Databricks workspace and single Immuta instance and keep policies enforced on all your tables as you migrate them. The diagram below shows Immuta enforcing policies on registered tables across workspaces.

In clusters A and D, Immuta enforces policies on data sources in each workspace's Hive metastore and in the Unity Catalog metastore shared by those workspaces. In clusters B, C, and E (which don't have Unity Catalog enabled in Databricks), Immuta enforces policies on data sources in the Hive metastores for each workspace.

Enforce policies as you migrate

With metastore magic, the Databricks Spark integration enforces policies only on data in the Hive metastore, while the Databricks Spark integration with Unity Catalog support or the Unity Catalog integration enforces policies on tables in the Unity Catalog metastore. The table below illustrates this policy enforcement.

| Table location | Databricks Spark integration | Databricks Spark integration with Unity Catalog support | Databricks Unity Catalog integration |
|---|---|---|---|
| Hive metastore | Yes | Yes | No |
| Unity Catalog metastore | No | Yes | Yes |

Essentially, you have two options to enforce policies on all your tables as you migrate after you have enabled Unity Catalog in Immuta:

- Enforce plugin-based policies on all tables: Enable the Databricks Spark integration with Unity Catalog support. For details about plugin-based policies, see this overview guide.

- Enforce plugin-based policies on Hive metastore tables and Unity Catalog native controls on Unity Catalog metastore tables: Enable the Databricks Spark integration and the Databricks Unity Catalog integration. Some Immuta policies are not supported in the Databricks Unity Catalog integration. Reach out to your Immuta representative for documentation of these limitations.

Databricks Spark integration with Unity Catalog support and Databricks Unity Catalog integration

Enabling both the Databricks Spark integration with Unity Catalog support and the Databricks Unity Catalog integration is not supported. Do not use both integrations to enforce policies on your tables.
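The two options and the unsupported combination described above can be summarized in a small model. This is only an illustrative sketch of the rules as this page states them, not Immuta code: the `Integration` enum, the `COVERAGE` mapping, and `protected_metastores` are hypothetical names introduced for the example.

```python
from enum import Enum


class Integration(Enum):
    SPARK = "Databricks Spark integration"
    SPARK_WITH_UC = "Databricks Spark integration with Unity Catalog support"
    UNITY_CATALOG = "Databricks Unity Catalog integration"


# Metastores each integration enforces policies on, as described on this page.
COVERAGE = {
    Integration.SPARK: frozenset({"hive"}),
    Integration.SPARK_WITH_UC: frozenset({"hive", "unity_catalog"}),
    Integration.UNITY_CATALOG: frozenset({"unity_catalog"}),
}


def protected_metastores(enabled):
    """Return the set of metastores covered by the enabled integrations.

    Raises ValueError for the combination this page calls unsupported.
    """
    enabled = set(enabled)
    unsupported = {Integration.SPARK_WITH_UC, Integration.UNITY_CATALOG}
    if unsupported <= enabled:
        raise ValueError(
            "Spark integration with Unity Catalog support cannot be combined "
            "with the Unity Catalog integration"
        )
    covered = set()
    for integration in enabled:
        covered |= COVERAGE[integration]
    return covered


# Option 1: plugin-based policies on all tables.
print(sorted(protected_metastores([Integration.SPARK_WITH_UC])))
# Option 2: plugin-based policies on Hive, native controls on Unity Catalog.
print(sorted(protected_metastores([Integration.SPARK, Integration.UNITY_CATALOG])))
```

Both options cover the Hive and Unity Catalog metastores. They differ in how policies are enforced on Unity Catalog tables, which the model deliberately leaves out.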

Enforcing policies on Databricks SQL

Databricks SQL cannot run the Databricks Spark plugin to protect tables, so Immuta policies are not enforced on Hive metastore data sources queried through Databricks SQL.

To enforce policies on data sources in Databricks SQL, use Hive metastore table access controls to manually lock down Hive metastore data sources and the Databricks Unity Catalog integration to protect tables in the Unity Catalog metastore. Table access control is enabled by default on SQL warehouses, and any Databricks cluster without the Immuta plugin must have table access control enabled.
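As an illustration of locking down a Hive metastore data source manually, the sketch below builds the kind of `GRANT` statements an administrator might run under Hive metastore table access control. It assumes the legacy `GRANT <privilege> ON <object> TO <principal>` syntax and that a principal needs `USAGE` on the schema in addition to `SELECT` on the table. The helper names, the `analysts` group, and the `sales.orders` table are hypothetical, so check the statements against the Databricks documentation for your workspace before using them.

```python
def quote(identifier: str) -> str:
    """Backtick-quote a SQL identifier, escaping embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def grant_read_statements(schema: str, table: str, principal: str) -> list:
    """Build statements granting read access on one Hive metastore table.

    Assumption: the table is addressed through the hive_metastore catalog.
    """
    schema_name = f"hive_metastore.{quote(schema)}"
    table_name = f"{schema_name}.{quote(table)}"
    return [
        # The principal needs USAGE on the schema to reach objects inside it.
        f"GRANT USAGE ON SCHEMA {schema_name} TO {quote(principal)}",
        # SELECT gives read access to the table itself.
        f"GRANT SELECT ON TABLE {table_name} TO {quote(principal)}",
    ]


# Hypothetical names, for illustration only.
for statement in grant_read_statements("sales", "orders", "analysts"):
    print(statement)
```

Because these grants are managed outside Immuta, they have to be kept in line with your Immuta policies by hand.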

Supported Databricks cluster configurations

The table below outlines the integrations supported for various Databricks cluster configurations. For example, the only integration available to enforce policies on a cluster configured to run on Databricks Runtime 9.1 is the Databricks Spark integration.

| Example cluster | Databricks Runtime | Unity Catalog in Databricks | Databricks Spark integration | Databricks Spark with Unity Catalog support | Databricks Unity Catalog integration |
|---|---|---|---|---|---|
| Cluster 1 | 9.1 | Unavailable | Unavailable | | |
| Cluster 2 | 10.4 | Unavailable | Unavailable | | |
| Cluster 3 | 11.3 | Unavailable | | | |
| Cluster 4 | 11.3 | | | | |
| Cluster 5 | 11.3 | | | | |

Legend:

The feature or integration is enabled.

The feature or integration is disabled.