Scalability and Evolvability

ABAC vs RBAC

Do you find yourself spending too much time managing roles and defining permissions in your system? When there is a new request for data or a policy change, do you spend an inordinate amount of time making those changes? Scalability and evolvability remove this burden. A scalable and evolvable data policy management system lets you make changes that accurately affect hundreds, if not thousands, of tables at once. It also lets you evolve your policies over time with minor changes or none at all, through future-proof policy logic.

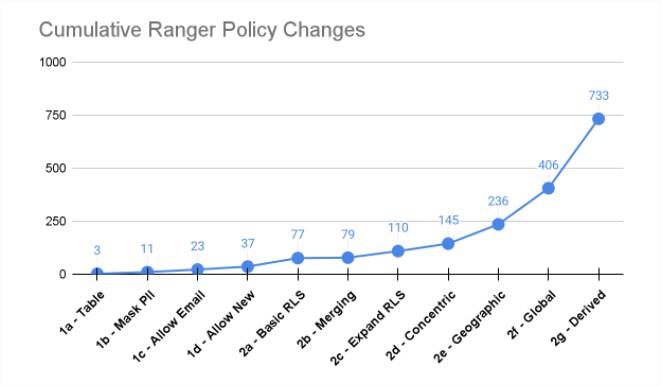

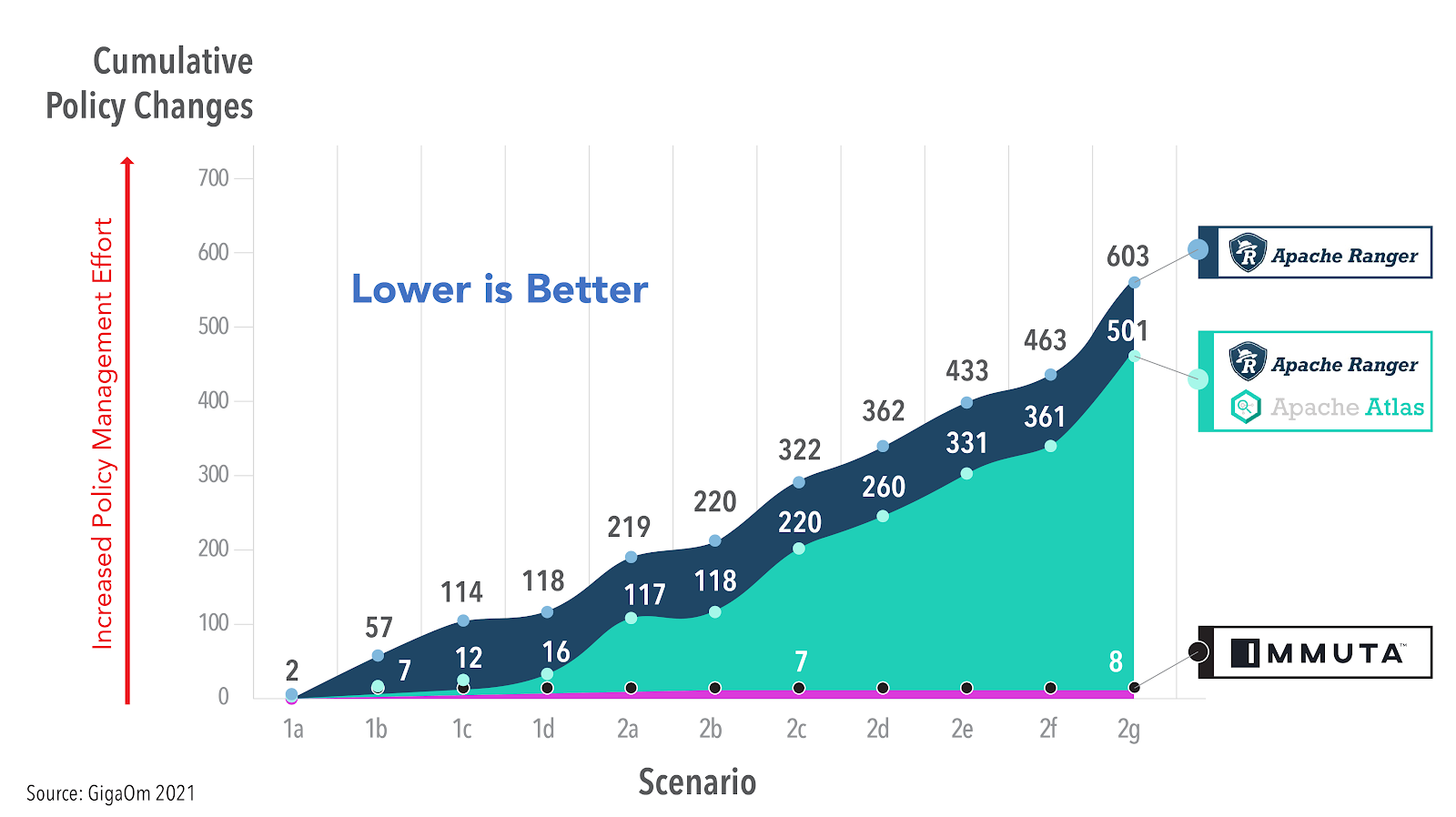

A lack of scalability and evolvability is rooted in attempting to apply a coarse role-based access control (RBAC) model to your modern data architecture. Independent research, using Apache Ranger (a well-known legacy RBAC system built for Hadoop) as an example, has shown the explosion of management effort required to do even the most basic tasks with an RBAC system: Apache Ranger Evaluation for Cloud Migration and Adoption Readiness.

In a scalable solution such as Immuta, the number of policy changes required stays extremely low, which is what provides scalability and evolvability. GigaOm researched exactly this, comparing Immuta's ABAC model to what it called Ranger's RBAC with Object Tagging (OT-RBAC) model, and found a 75 times increase in policy management with Ranger.

https://gigaom.com/report/cloud-data-security/

Value to you: You have more time for the complex tasks you should be spending time on, and you no longer fear making a policy change.

Value to the business: Policies can be easily enforced and evolved, allowing the business to be more agile, decrease time-to-data across your organization, and avoid errors.

Separating policy definition from role definition

When building access control into our database platforms, the concept of role-based access control (RBAC) is familiar. Roles define both who is in them and what those roles get access to. A good way to think about this is that roles conflate the who and the what: who is in them and what they have access to (but they lack the why).

In contrast, attribute-based access control (ABAC) allows you to decouple your roles from what they have access to, separating the what and the why from the who. This also lets you state the "why" explicitly in the policy, which gives you an incredible amount of scalability and understandability in policy building. Note that this does not necessarily mean you have to throw away your roles; you can make them more powerful and likely scale them back significantly.

If you remember the pictures and article from the start of this introduction, most of the access control scalability issues in Ranger, Snowflake, Databricks, and similar platforms are rooted in their use of an RBAC model rather than an ABAC model.

Example: Building row-level security with an ABAC model

Consider a table that contains a transaction_country column, and data localization requirements that restrict which countries' data specific users may see.

With a classic RBAC approach, you would need to create a role for every combination of country access. Remember that it's not just a role per country, because some users may need access to more than one country. Every time a new combination of countries is required, a new role must be created and managed to represent that access.
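To make the growth concrete: if every distinct set of countries a user might need becomes its own role, then n countries allow 2^n - 1 non-empty sets. The sketch below enumerates those sets. The country codes and the `role_...` naming scheme are made-up illustrations, and this is a worst case. In practice only the combinations actually requested become roles, but you cannot know ahead of time which ones those will be.

```python
from itertools import combinations

def roles_needed(countries: list[str]) -> list[str]:
    """List one role per non-empty combination of countries (worst case for pure RBAC)."""
    roles = []
    for size in range(1, len(countries) + 1):
        for combo in combinations(sorted(countries), size):
            roles.append("role_" + "_".join(combo))
    return roles

print(roles_needed(["US", "FR", "JP"]))
# ['role_FR', 'role_JP', 'role_US', 'role_FR_JP', 'role_FR_US', 'role_JP_US', 'role_FR_JP_US']

for n in (3, 10, 20):
    # Closed form for the number of non-empty subsets of n countries
    print(f"{n} countries -> {2**n - 1} possible roles")
```

With 20 countries the worst case is already 1,048,575 roles. The ABAC approach described next needs one policy regardless of n.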

With Immuta's ABAC approach, because Immuta decouples policy logic from users, you can simply assign countries to users as attributes and Immuta will filter appropriately on the fly. This can be done with a single policy in Immuta that references the users' country metadata. If you add a new user with a never-before-seen combination of countries, the RBAC model requires you to remember to create a new role and policy before they can see data. In the ABAC model it will "just work" because everything is dynamic, future-proofing your policies.
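The single-policy idea can be sketched in a few lines. This is not Immuta's API. It assumes each user carries a hypothetical `countries` attribute and each row carries `transaction_country`. One attribute-driven rule then covers any combination of countries, including ones nobody planned for.

```python
from dataclasses import dataclass, field

@dataclass
class User:
    name: str
    # Hypothetical user attribute: the countries this user may see
    countries: set[str] = field(default_factory=set)

def country_filter(rows: list[dict], user: User) -> list[dict]:
    """One policy for everyone: keep rows whose transaction_country is in the user's attributes."""
    return [row for row in rows if row["transaction_country"] in user.countries]

transactions = [
    {"id": 1, "transaction_country": "US"},
    {"id": 2, "transaction_country": "FR"},
    {"id": 3, "transaction_country": "JP"},
]

# A never-before-seen combination (FR + JP) needs no new role or policy
analyst = User("analyst", {"FR", "JP"})
print([r["id"] for r in country_filter(transactions, analyst)])  # [2, 3]
```

Onboarding a user with a new set of countries only changes that user's attributes. The policy itself stays untouched.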

For more discussion about this model, see the Role-Based Access Control vs. Attribute-Based Access Control — Explained blog or the NIST article on ABAC, Guide to Attribute Based Access Control (ABAC) Definition and Considerations.

Policy boolean logic

The only way to support AND boolean logic with a role-based model (RBAC) is by creating a new role that conflates the two or more roles you want to AND together.

For example, a governor wants users to see certain data only if they have completed both security awareness training and consumer privacy training. It would be natural to keep these as two separate pieces of metadata attached to users to drive the policy. However, a role-based model assumes that roles are either OR'ed together in the policy logic or used only one at a time. Because of this, you have to create a single role representing the combined requirement "users with security awareness training AND consumer privacy training." This quickly becomes unmanageable: you need to account for every possible combination relevant to a policy, and you have no way of knowing those combinations ahead of time.

With Immuta and its ABAC model, you can keep user attributes as meaningful, separate facts about users and then use boolean logic to combine those facts in policy logic. For example, consider the country filtering policy described in the prior section. You could build the filtering as described, but additionally add an exception such as "do this filtering for everyone except members of group security awareness training and members of group consumer privacy training," without creating a new role that represents the combination.
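The exception above can be sketched as a predicate over two independent group memberships. The group names come from the example. The function names and the representation of groups as a set of strings are illustrative assumptions, not Immuta's policy syntax.

```python
SECURITY = "security awareness training"
PRIVACY = "consumer privacy training"

def exempt_from_country_filter(groups: set[str]) -> bool:
    # AND of two independent facts; no combined "security + privacy" role is needed
    return SECURITY in groups and PRIVACY in groups

def visible_rows(rows: list[dict], user_countries: set[str], groups: set[str]) -> list[dict]:
    if exempt_from_country_filter(groups):
        return list(rows)  # the exception: no country filtering
    # Everyone else gets the country filter from the prior section
    return [r for r in rows if r["transaction_country"] in user_countries]

rows = [
    {"id": 1, "transaction_country": "US"},
    {"id": 2, "transaction_country": "FR"},
    {"id": 3, "transaction_country": "JP"},
]
print(len(visible_rows(rows, {"US"}, {SECURITY})))           # 1: one training only, still filtered
print(len(visible_rows(rows, {"US"}, {SECURITY, PRIVACY})))  # 3: both trainings, exempt
```

Each training remains a standalone fact about the user and can be reused in other policies with different boolean combinations.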

Exception-based policy authoring

This next section draws on an analogy: Imagine you are planning your wedding reception. It’s a rather posh affair, so you have a bouncer checking people at the door.

Do you tell your bouncer who is allowed in (exception-based), or who to keep out (rejection-based)?

The answer to that question should be obvious, but many policy engines allow both exception- and rejection-based policy authoring, which causes a conflict nightmare. In our wedding analogy, exception-based policy authoring means the bouncer has a list of who should be let into the reception. This will always be a shorter list of users/roles if you follow the principle of least privilege, the idea that any user, program, or process should have only the bare minimum privileges necessary to perform its function: you can't go to the wedding unless invited. This aligns with the concept of privacy by design, a foundation of the CPRA and GDPR, one of whose principles is "Privacy as the default setting."

What this means in practice is that you should define what should be hidden from everyone, and then slowly peel back exceptions as needed.

How could your data leak if your policies weren't exception-based?

Suppose you wrote two policies:

Mask Person Name using hashing for everyone who possesses attribute Department HR.

Mask Person Name using constant REDACTED for everyone who possesses attribute Department Analytics.

Now a user in Department Finance comes along. They will see the Person Name column in the clear because they were not accounted for, just as the bouncer would let a stranger into your wedding because you didn't think to add them to your deny list ahead of time.
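The leak can be reproduced in a few lines. The sketch assumes a single `department` attribute and uses illustrative masking helpers; the truncated SHA-256 is for display only. `rejection_based` encodes the two policies as written. `default_masked` keeps the HR hashing rule but ends with a catch-all "otherwise" mask, so a value is shown in the clear only for departments explicitly listed in the hypothetical `clear_for` exception set.

```python
import hashlib

def hash_mask(value: str) -> str:
    return hashlib.sha256(value.encode()).hexdigest()[:12]

def redact(value: str) -> str:
    return "REDACTED"

def rejection_based(value: str, department: str) -> str:
    # Only the departments someone remembered to list get masked
    if department == "HR":
        return hash_mask(value)
    if department == "Analytics":
        return redact(value)
    return value  # everyone else (e.g. Finance) sees the clear value: the leak

def default_masked(value: str, department: str, clear_for: frozenset = frozenset()) -> str:
    # Clear text only through an explicit exception
    if department in clear_for:
        return value
    if department == "HR":
        return hash_mask(value)
    return redact(value)  # catch-all OTHERWISE: unanticipated users stay masked

print(rejection_based("Jane Doe", "Finance"))  # Jane Doe
print(default_masked("Jane Doe", "Finance"))   # REDACTED
```

The unanticipated department is the only thing that changes between the two runs, and only the default-masked version fails safe.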

There are two main issues with allowing bi-directional policies, which is why Immuta allows only exception-based policies, in line with the industry standard of least-privilege access:

Ripe for data leaks: Rejection-based policies are extremely dangerous, which is why Immuta does not allow them except with a catch-all OTHERWISE statement at the end. Again, this is because if a new role or attribute comes along that you haven't accounted for, that data will be leaked. It is impossible to anticipate ahead of time every user, attribute, or group that could exist, just as it's impossible to anticipate every person off the street who might try to enter your posh wedding and add them to your deny list.

Ripe for conflicts and confusion: Tools that allow both rejection-based and exception-based policy building create a conflict disaster. Let's walk through a simple example, keeping in mind that real deployments may have hundreds of these policies:

Policy 1: mask name for everyone who is member of group A

Policy 2: mask name for everyone except members of group B

What happens if someone is in both groups A and B? The engine has to fall back on policy ordering to resolve the conflict. That requires policy authors to understand all other policies before building their own, and it makes it nearly impossible to understand what a single policy does without looking at all policies.
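A small sketch shows why ordering becomes load-bearing. The two policies are encoded as hypothetical functions that return "masked", "clear", or None (no opinion), and a first-match engine evaluates them in list order. This is a generic evaluation strategy chosen for illustration, not how any particular product resolves conflicts. The same user, in both A and B, gets a different answer depending only on which policy is listed first.

```python
from typing import Callable, Optional

# Each policy returns "masked", "clear", or None when it has no opinion
Policy = Callable[[set], Optional[str]]

def policy_1(groups: set) -> Optional[str]:
    # Mask name for everyone who is a member of group A
    return "masked" if "A" in groups else None

def policy_2(groups: set) -> Optional[str]:
    # Mask name for everyone except members of group B (B members are exempt)
    return "clear" if "B" in groups else "masked"

def first_match(policies: list[Policy], groups: set) -> str:
    for policy in policies:
        decision = policy(groups)
        if decision is not None:
            return decision
    return "masked"  # no policy applied: default to least privilege

user_groups = {"A", "B"}
print(first_match([policy_1, policy_2], user_groups))  # masked
print(first_match([policy_2, policy_1], user_groups))  # clear
```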

Hierarchical tag-based policy definitions

While many platforms support the concept of object tagging / sensitive data tagging, very few truly support hierarchical tag structures.

First, a quick overview of what hierarchical tag structure means:

This would be a flat tag structure:

SUV

Subaru

Truck

Jeep

Gladiator

Outback

Each tag stands on its own, and the tags are not associated with one another in any way; there's no relationship between Jeep and Gladiator or between Subaru and Outback.

A hierarchical tagging structure establishes these relationships:

SUV.Subaru.Outback

Truck.Jeep.Gladiator

Support for a tagging hierarchy means more than supporting the tag structure itself; more importantly, policy enforcement should respect the hierarchy as well. Let's run through a quick, contrived example. Suppose you want the following policies:

Mask by making null any SUV data

Mask using hashing any Outback data

With a flat structure, a column containing Outback data must also be tagged SUV for the first policy to cover it, so those two policies will conflict with one another on that column. To avoid that problem you would have to order which policies take precedence, which can get extremely complex when you have many policies.

Instead, if your policy engine truly supports a tagging hierarchy, like Immuta does, it will recognize that Outback is more specific than SUV, and have that policy take precedence.

Mask by making null any SUV data

Mask using hashing any SUV.Subaru.Outback data

Policies are applied correctly without any need for complex ordering of policies.
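Here is a minimal sketch of how an engine could use the hierarchy to pick the most specific policy. It assumes tags are dot-separated paths and that a policy applies to a column when the policy's tag equals, or is an ancestor of, the column's tag. The masking functions and the `POLICIES` table are illustrative, not Immuta's implementation.

```python
import hashlib

def is_ancestor_or_self(policy_tag: str, column_tag: str) -> bool:
    # "SUV" covers "SUV.Subaru.Outback"; compare whole path segments, not raw string prefixes
    p, c = policy_tag.split("."), column_tag.split(".")
    return c[: len(p)] == p

def make_null(value: str):
    return None  # "mask by making null"

def hash_value(value: str) -> str:
    return hashlib.sha256(value.encode()).hexdigest()[:12]  # "mask using hashing"

POLICIES = {"SUV": make_null, "SUV.Subaru.Outback": hash_value}

def apply_mask(value: str, column_tag: str):
    matching = [tag for tag in POLICIES if is_ancestor_or_self(tag, column_tag)]
    if not matching:
        return value  # no tag-based policy covers this column
    # Deepest (most specific) tag wins; no manual ordering of policies needed
    most_specific = max(matching, key=lambda t: len(t.split(".")))
    return POLICIES[most_specific](value)

print(apply_mask("sample", "SUV.Subaru"))            # None: only the SUV policy applies
print(apply_mask("sample", "SUV.Subaru.Outback"))    # hashed: the Outback policy is more specific
print(apply_mask("sample", "Truck.Jeep.Gladiator"))  # sample: no policy applies
```

Precedence comes from tag depth alone, so adding a new policy never requires re-ranking the existing ones.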

Yes, this does put some work on the business to build specificity, or depth, correctly into their tagging hierarchy. This is not necessarily easy. However, the logic has to live somewhere, and keeping it in the tagging hierarchy rather than in policy order again allows you to separate policy definition from data definition. This gives you scalability, evolvability, understandability, and, most importantly, correctness, because policy conflicts can be caught at policy-authoring time.

Subscription policies: benefits of attribute-based table GRANTs

There is a myriad of techniques and processes companies use to determine which tables users should have access to. Some organizations previously had 7 people responding to an email chain for approval before a DBA ran a table GRANT statement, for example. Manual approvals are sometimes necessary, of course, but there's a lot of power and consistency in establishing objective criteria for gaining access to a table rather than relying on subjective human approvals.

Let’s take the “7 people approve with an email chain” example. Ask the question, “Why do any of you 7 say yes to the user gaining access?” If it’s objective criteria, you can completely automate this process. For example, if the approver says, “I approve them because they are in group x and work in the US,” that is user metadata that could allow the user to automatically gain access to the tables, either ahead of time or when requested. This removes a huge burden from your organization and avoids mistakes.
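The approver's stated reasoning can be turned into a rule evaluated against user metadata. The sketch below assumes hypothetical attributes (`groups`, `country`) and a hypothetical table name. It only checks the criteria and produces the GRANT statement a DBA would otherwise run by hand. Exact GRANT syntax varies by platform; some platforms grant to roles rather than users. Real code should also quote identifiers rather than interpolate them.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class UserMetadata:
    username: str
    groups: frozenset
    country: str

def meets_subscription_policy(user: UserMetadata) -> bool:
    # Objective version of "I approve them because they are in group x and work in the US"
    return "x" in user.groups and user.country == "US"

def grant_statement(user: UserMetadata, table: str) -> Optional[str]:
    """Return the GRANT to run automatically, or None if the criteria aren't met."""
    if meets_subscription_policy(user):
        return f"GRANT SELECT ON {table} TO {user.username};"
    return None

alice = UserMetadata("alice", frozenset({"x"}), "US")
bob = UserMetadata("bob", frozenset({"x"}), "JP")
print(grant_statement(alice, "sales.transactions"))  # GRANT SELECT ON sales.transactions TO alice;
print(grant_statement(bob, "sales.transactions"))    # None
```

Because the decision depends only on metadata, it can run ahead of time for every qualifying user or on demand when someone requests access.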

Objective criteria are better than subjective judgment: they increase accuracy, reduce bias and errors, and make compliance easier to prove. If you can be objective and prescriptive about who should gain access to which tables, you should.

The anti-pattern is manual approvals. Although some regulations require them, if there's any possible way to switch to objective approvals, you should do it. Subjective, human-driven approvals bring bias, a larger chance of errors, and no consistency. This makes it very difficult to prove compliance; it simply passes the buck (and the risk) to the approvers and wastes their valuable time.

One could argue that it's subjective or biased to assign a user the Country.JP attribute. This is not true because, remember, data policy is separated from user metadata. Giving a user the Country.JP attribute simply defines that user: it is a fact about that user with no implied access attached, and the attribute is objective (you know whether or not they are in Japan).

Conflating an access decision with a role or group is common practice. As a result, not only do you end up with manual approval flows, you also end up with role explosion from creating roles to cover every combination of access.
