Trusted Libraries Installation

Audience: System Administrators

Content Summary: This page outlines how to install and configure trusted third-party libraries for Databricks.

1 - Install the Library

-

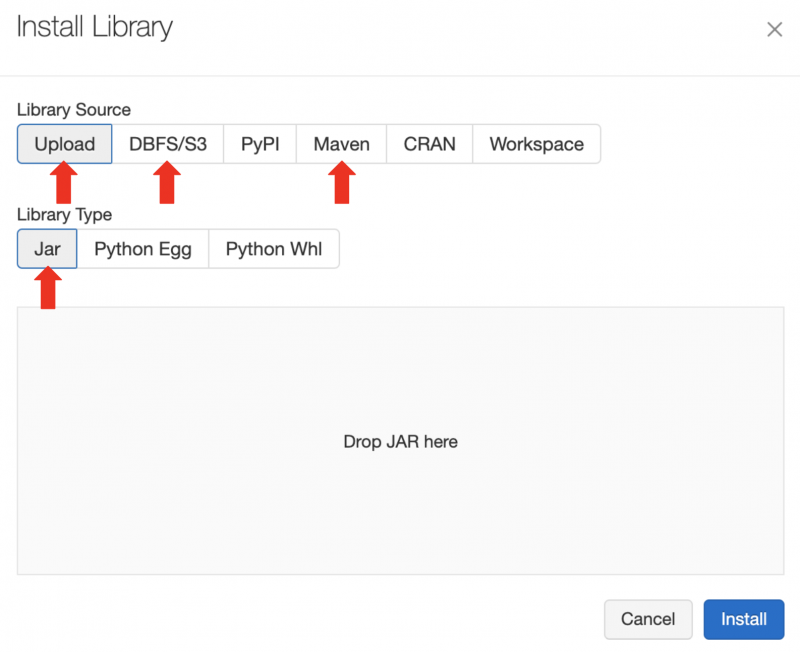

In the Databricks Clusters UI, install your third-party library .jar or Maven artifact with Library Source

Upload,DBFS,DBFS/S3, orMaven. Alternatively, use the Databricks libraries API.

- In the Databricks Clusters UI, add the IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS property as a Spark environment variable and set it to your artifact's URI:

Maven Artifacts

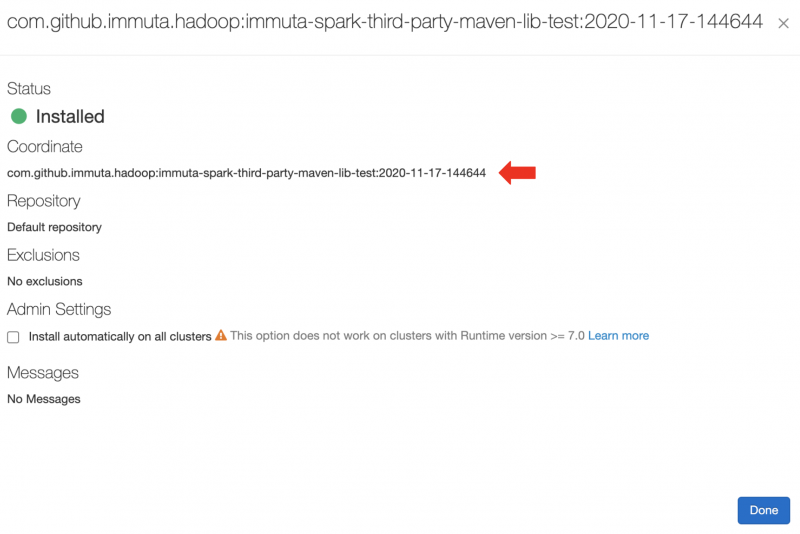

For Maven artifacts, the URI is maven:/<maven_coordinates>, where <maven_coordinates> is the Coordinates field found when clicking on the installed artifact on the Libraries tab in the Databricks Clusters UI. Here's an example of an installed artifact:

In this example, you would add the following Spark environment variable:

IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS=maven:/com.github.immuta.hadoop.immuta-spark-third-party-maven-lib-test:2020-11-17-144644

.jar Artifacts

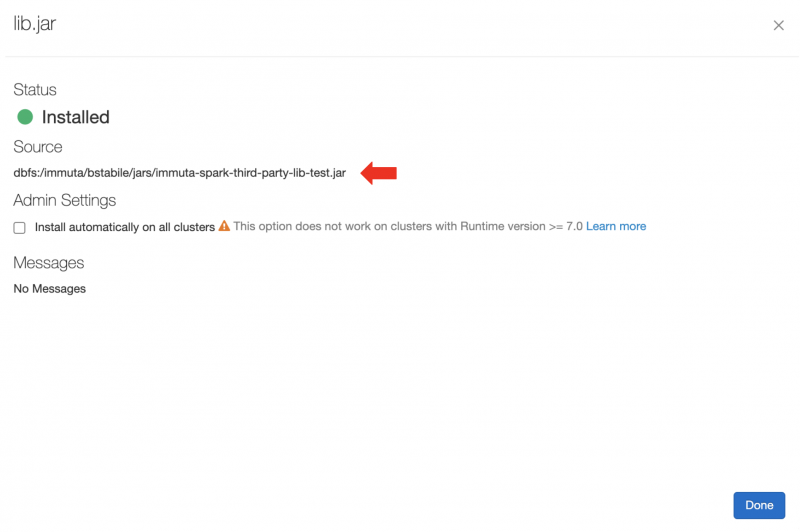

For .jar artifacts, the URI is the Source field found when clicking on the installed artifact on the Libraries tab in the Databricks Clusters UI. For artifacts installed from DBFS or S3, this is the original URI of your artifact. For uploaded artifacts, Databricks renames your .jar and places it in a directory in DBFS, so use the value shown in the Source field. Here's an example of an installed artifact:

In this example, you would add the following Spark environment variable:

IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS=dbfs:/immuta/bstabile/jars/immuta-spark-third-party-lib-test.jar

Specifying More than One Trusted Library

To specify more than one trusted library, separate the URIs with commas:

IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS=maven:/my.group.id:my-package-id:1.2.3,dbfs:/path/to/my/library.jar

- Restart the cluster.
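The steps above can be sketched in Python for administrators who script cluster setup rather than using the UI. This is a minimal sketch under stated assumptions, not an official tool. It builds a request body in the shape accepted by the Databricks Libraries API install call (`cluster_id` plus a `libraries` list of `jar` and `maven` entries) and derives the matching IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS value from the same list. The helper names (`trusted_lib_uri`, `install_payload`, `trusted_libs_env`) and the `<cluster-id>` placeholder are hypothetical. The sketch only prints the values and sends nothing. It covers Maven artifacts and .jar files referenced by their DBFS or S3 URI; for uploaded .jar files, copy the renamed URI from the Source field instead.

```python
import json

ENV_VAR = "IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS"


def trusted_lib_uri(library: dict) -> str:
    """Return the trusted-library URI for one library entry.

    Maven artifacts become maven:/<maven_coordinates>; .jar artifacts
    installed from DBFS or S3 keep their original URI.
    """
    if "maven" in library:
        return "maven:/" + library["maven"]["coordinates"]
    if "jar" in library:
        return library["jar"]
    raise ValueError(f"unsupported library entry: {library!r}")


def install_payload(cluster_id: str, libraries: list) -> dict:
    """Build a request body for the Libraries API install call (hypothetical helper)."""
    return {"cluster_id": cluster_id, "libraries": libraries}


def trusted_libs_env(libraries: list) -> dict:
    """Build the Spark environment variable, comma-delimiting the URIs."""
    return {ENV_VAR: ",".join(trusted_lib_uri(lib) for lib in libraries)}


# Example entries taken from the multi-library example above.
libraries = [
    {"maven": {"coordinates": "my.group.id:my-package-id:1.2.3"}},
    {"jar": "dbfs:/path/to/my/library.jar"},
]

if __name__ == "__main__":
    # "<cluster-id>" is a placeholder; substitute your own cluster ID.
    print(json.dumps(install_payload("<cluster-id>", libraries), indent=2))
    for name, value in trusted_libs_env(libraries).items():
        print(f"{name}={value}")
```

Deriving both values from one list keeps the installed libraries and the trusted URIs in sync, which avoids trusting a library that was never installed or the reverse. The cluster still needs a restart after the environment variable changes.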

2 - Execute a Command in a Notebook

Once the cluster is up, execute a command in a notebook. If the trusted library installation is successful, you should see driver log messages like this:

TrustedLibraryUtils: Successfully found all configured Immuta configured trusted libraries in Databricks.

TrustedLibraryUtils: Wrote trusted libs file to [/databricks/immuta/immutaTrustedLibs.json]: true.

TrustedLibraryUtils: Added trusted libs file with 1 entries to spark context.

TrustedLibraryUtils: Trusted library installation complete.
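If you prefer to check the driver log programmatically, the following is a minimal sketch that looks for the messages listed above in log text you have already copied or downloaded. How you obtain that text is outside its scope. The function name `missing_messages` is hypothetical, and matching is on substrings because real log lines may carry timestamps or log levels that are not shown here.

```python
# Substrings taken from the driver log messages shown above.
EXPECTED_MESSAGES = [
    "Successfully found all configured Immuta configured trusted libraries in Databricks.",
    "Wrote trusted libs file to",
    "Added trusted libs file with",
    "Trusted library installation complete.",
]


def missing_messages(log_text: str) -> list:
    """Return the expected messages that do not appear in any TrustedLibraryUtils line."""
    lines = [line for line in log_text.splitlines() if "TrustedLibraryUtils:" in line]
    return [msg for msg in EXPECTED_MESSAGES if not any(msg in line for line in lines)]


# Sample text reproducing the messages from this page.
sample_log = """\
TrustedLibraryUtils: Successfully found all configured Immuta configured trusted libraries in Databricks.
TrustedLibraryUtils: Wrote trusted libs file to [/databricks/immuta/immutaTrustedLibs.json]: true.
TrustedLibraryUtils: Added trusted libs file with 1 entries to spark context.
TrustedLibraryUtils: Trusted library installation complete.
"""

if __name__ == "__main__":
    missing = missing_messages(sample_log)
    if missing:
        print("Missing messages:", missing)
    else:
        print("All expected trusted library messages found.")
```

An empty result suggests the installation completed; any missing message points to the step worth rechecking in the cluster configuration.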