Test and Deploy Policy

Now that you have a sense of which policies you want to enforce, you may need to test those policies before deploying them. Testing is important if existing users access the tables you are protecting and you want to understand the impact on those users before moving a policy to production. However, consider this step optional.

It's also important to remember that Immuta subscription policies are additive, meaning no existing SELECT grants on tables will be revoked by Immuta when a subscription policy is created. It is your responsibility to revoke all pre-Immuta SELECT grants once you are happy with Immuta's controls.

Use a single Immuta for testing policies

While it may seem wise to have separate Immuta tenants for development and production, mapped to separate development and production data platforms, that is neither necessary nor recommended because there are too many variables to account for and keep up-to-date in your data platform and identity management system:

1. Many Immuta data policies rely heavily on the actual data values. Take, for example, the following row-level policy: `only show rows where user possesses an attribute in Work Location that matches the value in the column tagged Discovered.Entity.Location`. This policy compares the user’s office location to the data in the column tagged `Discovered.Entity.Location`, so testing it against a development table with incorrect values is an invalid test that could lead to false positives or false negatives.

2. Similar to #1, testing with invalid or mock user attributes or groups instead of your real production users can produce an invalid test result, just as invalid data can.

3. Policies can (and should) target tables using tags discovered by sensitive data discovery (SDD) or external catalogs (such as Alation or Collibra). For SDD, that means development data needs to match the production data so it is discovered and tagged correctly. For external catalogs, your external catalog would need to have everything in development tagged exactly as it is in production.

4. Users can have attributes and groups from many sources beyond your identity manager, so similar to #3, all of those sources would need to be synchronized for your development user set exactly as they are for your production user set.

5. Your development user set may also lack all relevant permutations of access that need to be tested (sets of attributes/groups relevant to the policy logic). These permutations are not knowable a priori because they depend on the policy logic, so you would have to create all permutations (or validate existing ones) every time you create a new policy.

6. Lastly, you have a development data environment to test data changes before moving to production. That means your development data environment needs the production policies in place. In other words, policies are part of what needs to be replicated consistently across development environments.

Be aware that this does not suggest you don’t need a development data platform environment; that could certainly be necessary for your transformation jobs and testing (which should include policy, per #6). However, using non-production data and non-production users for policy testing is a bad approach because of the complexity of replicating everything perfectly in development: by the time you’ve done all that, development matches production exactly.

Best practice for policy testing

Immuta recommends testing against clones of production data, in a separate database, using your production data platform and production user identities with a single Immuta tenant. If you believe you have a perfectly matching development environment that covers items 1-5 in the section above, you can use it (and should for #6), but we still recommend a single Immuta tenant because Immuta allows logical separation of development and production policy work without requiring physically separate Immuta tenants.

Logically separating your data platform

You can skip this section if you believe you have a perfectly matching separate physical development data platform that covers 1-5 in the Use a single Immuta for testing policies section, or are already logically separating your dev and prod data in a single physical data platform.

So how do you test policies without impacting production workloads if you are testing against production? You create a logical separation of development and production in your data platform. Rather than physically separating your data into completely separate accounts or workspaces (or whatever term your data warehouse uses), logically separate development from production using the mechanisms the data platform provides, such as databases. This significantly reduces the replication management burden, but it does put more pressure on ensuring your policy controls are accurate, which is why you have Immuta!

Many of our customers already take this approach for development and testing in their data platform. Instead of having physically separate accounts or workspaces of their data platform, they create logical separation using databases and table/view clones within the same data platform instance. If you are already doing this, you can skip the rest of this section.

Follow the recommendations below to create logical separation of development data in your data platform:

- Snowflake: Clone the tables that need to be tested to a different development database. While Snowflake will copy the policies with the clone, as soon as you build an Immuta policy on top of it, the Immuta policy will take precedence and eliminate the cloned policies, which is the desired behavior.

- Databricks Unity Catalog: Unity Catalog does not yet support shallow cloning tables with row/column policies, so if there is an existing row/column policy on the table that you intend to edit, rather than shallow cloning, you should `create table as (select…limit 100)` to create a real copy of the tables, with a limit on the rows copied, in a different development database. Make sure you create the table with a user that has full access to the data. If you are building a row security policy, ensure the copied rows have a strong distribution of the values in the column that drives the policy.

- Databricks Spark: Shallow clone the tables that need to be tested to a different development database.

- Redshift: Create new views backed by the tables in question in a different development database and register them with Immuta. Since Immuta enforces policies in Redshift using views, the new views will not be impacted by any existing policy on the tables that back them.

- Starburst (Trino): Virtualize the tables as new tables in a different development catalog in Starburst (Trino) for testing the policy.

- Azure Synapse Analytics: Virtualize the tables as new tables in a different development database in Synapse for testing the policy.
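As a rough illustration of the Snowflake and Databricks recommendations above, the following sketch builds the SQL statement that copies a production table into a development database. The function name, the platform keys, and the table names are hypothetical, and the Unity Catalog statement assumes `select *` for the part the recommendation leaves elided. Identifiers are interpolated without quoting, so treat this as a sketch for trusted, hand-written names only.

```python
def clone_statement(platform: str, source: str, target: str, row_limit: int = 100) -> str:
    """Build a SQL statement that copies `source` into `target` for policy testing.

    `source` and `target` are fully qualified table names (hypothetical examples:
    prod_db.sales.orders and dev_db.sales.orders).
    """
    if platform == "snowflake":
        # Snowflake zero-copy clone.
        return f"CREATE TABLE {target} CLONE {source}"
    if platform == "databricks_spark":
        # Delta Lake shallow clone.
        return f"CREATE TABLE {target} SHALLOW CLONE {source}"
    if platform == "databricks_unity_catalog":
        # Real copy with a row limit, for tables that already have a
        # row/column policy and therefore cannot be shallow cloned.
        return f"CREATE TABLE {target} AS SELECT * FROM {source} LIMIT {row_limit}"
    raise ValueError(f"no clone recipe sketched for platform: {platform}")


if __name__ == "__main__":
    for name in ("snowflake", "databricks_spark", "databricks_unity_catalog"):
        print(clone_statement(name, "prod_db.sales.orders", "dev_db.sales.orders"))
```

Run the Unity Catalog statement as a user with full access to the data, and check that the limited copy still contains a good spread of values in the column that drives any row-level policy.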

If you are managing creation of tables in an automated way, it may be prudent to automatically create the clones/views as described above as part of that process. That way you don’t have to pick and choose tables to logically separate every time you need to do policy work - they are always logically separated already (and presumably discovered and registered by Immuta because they are registered for monitoring as well).

Append the database name to the names of cloned data sources

When you register the clones you’ve created with Immuta, ensure you append the database to your naming convention so you don’t hit any naming conflicts in Immuta.
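One way to picture this naming convention is the sketch below, which appends the database name to each table name and then checks the resulting names for duplicates. The function names, the underscore separator, and the database and table names are assumptions made for illustration; Immuta does not require this particular format.

```python
from collections import Counter
from typing import Iterable, List


def data_source_name(table: str, database: str, sep: str = "_") -> str:
    """Append the database name to the table name (assumed convention)."""
    return f"{table}{sep}{database}"


def find_conflicts(names: Iterable[str]) -> List[str]:
    """Return the names that occur more than once, sorted alphabetically."""
    counts = Counter(names)
    return sorted(name for name, count in counts.items() if count > 1)


if __name__ == "__main__":
    # The same table exists in a production and a development database.
    tables = [("orders", "prod_db"), ("orders", "dev_db")]
    print(find_conflicts(table for table, _ in tables))  # conflicts on bare table names
    print(find_conflicts(data_source_name(t, db) for t, db in tables))  # none once the database is appended
```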

Since your policies reference tags, not tables, you may be in a situation where it’s unclear which tables you need to logically separate for testing any given policy. To figure this out, you can build reports in Immuta to find which tables have the tags you plan to use in your policies using the What data sources has this tag been assigned to? report. But again, if you create logical separation by default as part of your data engineering process, this is not necessary.

Logically separating Immuta

If using a physically separate development data platform, ensure you have the Immuta data integration configured there as well.

Logical separation in Immuta is managed through domains. Create a new domain in Immuta for your cloned tables/views and register them in that domain; for example, policy 1 test domain. Note that you must register your development database in Immuta with schema monitoring turned on (Create sources for all tables in this database and monitor for changes) so that any new development tables/views appear automatically and you can assign the domain at creation time.

If you are unable to leverage domains, we recommend adding an additional tag when the data is registered so it can be included when you describe where to target the policy. For example, tag the tables with policy 1 tag.

Ensuring development data is tagged correctly

If using SDD to tag data:

SDD does not discriminate between development and production data; the data will be tagged consistently as soon as it's registered with Immuta, which is another reason we recommend you use SDD for tagging.

If using an external catalog:

- In the case where you are using the out-of-the-box Snowflake tag integration, the clone will carry the tags, which in turn will be reflected on the development data in Immuta.

- If using a custom REST catalog, we recommend enhancing your custom lookup logic to use a naming convention for the development data to ensure the tag sync from the development data is done against the production data. For example, you could strip off a `dev_` prefix from the database.schema.table to sync tags from the production version in your catalog.

- If using the out-of-the-box integrations with Collibra or Alation, we recommend also creating development tables in those catalogs and tagging them consistently.
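For the custom REST catalog case above, the lookup logic could map a development table name back to its production counterpart before fetching tags. The sketch below is a minimal version of that mapping, assuming the `dev_` prefix sits on the database part of a three-part database.schema.table name; the function name and example names are hypothetical.

```python
DEV_PREFIX = "dev_"  # assumed naming convention for development databases


def production_name(qualified_name: str, prefix: str = DEV_PREFIX) -> str:
    """Map a development database.schema.table name to its production name.

    Names whose database part does not start with the prefix are returned unchanged.
    """
    parts = qualified_name.split(".")
    if len(parts) != 3:
        raise ValueError(f"expected database.schema.table, got: {qualified_name}")
    database, schema, table = parts
    if database.lower().startswith(prefix):
        database = database[len(prefix):]
    return ".".join((database, schema, table))


if __name__ == "__main__":
    print(production_name("dev_sales.public.orders"))
    print(production_name("sales.public.orders"))
```

The catalog lookup would then request tags for the returned production name, so development and production data stay tagged identically without duplicating catalog entries.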

If manually tagging:

You must ensure that you tag the newly registered development data in Immuta (manually or over the API) consistently with how it’s tagged in production.

Testing a new policy

Once the development data is registered, you can build the new policy you want to test so that it only targets the tables in that domain. There are two ways to do this. If you build the policy with a user that has GOVERNANCE permission, add that domain (or the policy tag you added as part of the registration process) as a target for the policy. Otherwise, ensure the user building the policy has manage policies permission only in the development domain, so their policies will be scoped to that domain.

Once the policy is activated (using either technique), it will only apply to the cloned development tables/views because the policy was scoped to that domain/tag, or because the user creating the policy is scoped to managing policies in that domain. At this point, you can invite either the users who will be impacted by the policy or your standard set of testers to test the change.

To know which users are impacted, ensure you have Detect enabled, go to People → Overview → Filter: Tag, and enter the tags in question to see which users are most active and have a good spread of access permutations (e.g., some users that gain access and others that lose access). We recommend inviting the users who query the tables/views in question the most to test the policy. If you are unable to use Detect, you can visit the audit screen and manually inspect which users have queried the tables, or check the internal audit logs of your data platform.

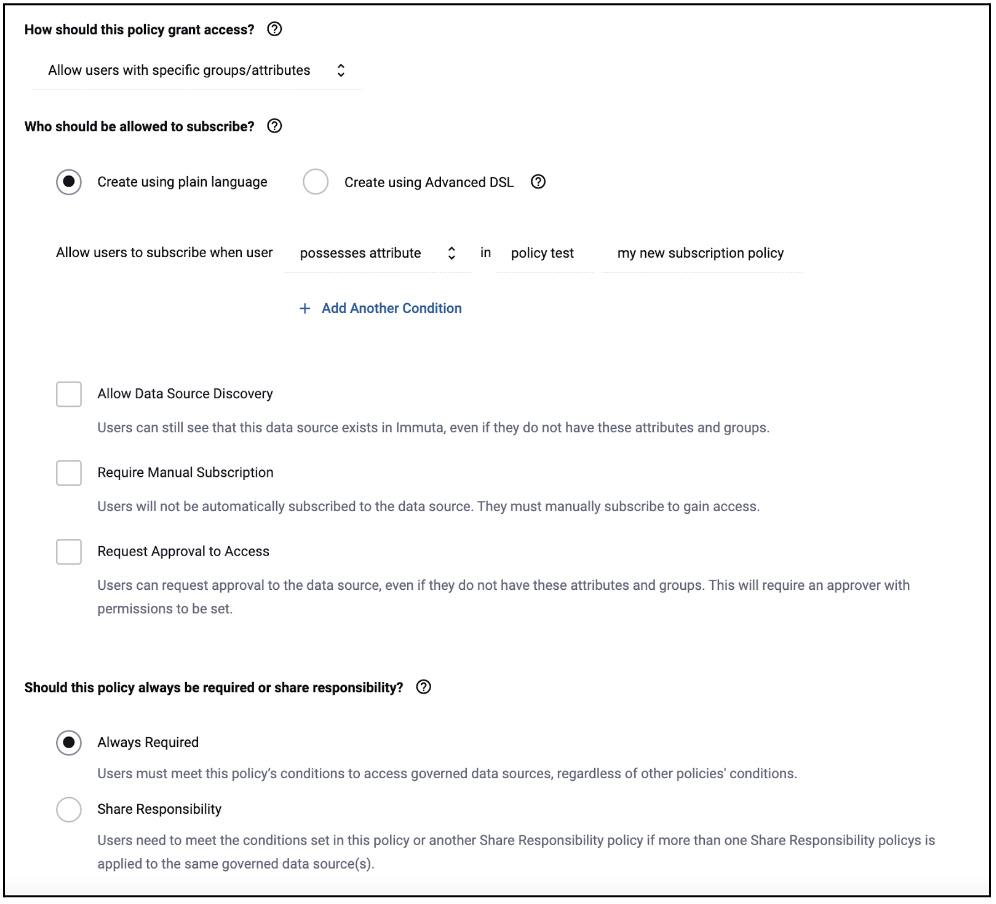
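If you fall back to audit logs rather than Detect, ranking users by query count is a simple tally. The sketch below assumes the audit records have already been exported as dictionaries with hypothetical `user` and `table` keys; real audit formats differ by platform, so the parsing step is left out.

```python
from collections import Counter
from typing import Iterable, List, Mapping, Tuple


def most_active_users(
    audit_records: Iterable[Mapping[str, str]],
    tables: Iterable[str],
    top_n: int = 5,
) -> List[Tuple[str, int]]:
    """Count queries per user against the given tables, most active first."""
    wanted = set(tables)
    counts = Counter(
        record["user"] for record in audit_records if record["table"] in wanted
    )
    return counts.most_common(top_n)


if __name__ == "__main__":
    # Hypothetical, hand-written records for illustration only.
    records = [
        {"user": "alice", "table": "prod_db.sales.orders"},
        {"user": "bob", "table": "prod_db.sales.orders"},
        {"user": "alice", "table": "prod_db.sales.orders"},
        {"user": "carol", "table": "prod_db.hr.salaries"},
    ]
    print(most_active_users(records, ["prod_db.sales.orders"]))
```

Query volume alone does not guarantee a good spread of access permutations, so it is still worth checking that the shortlisted users include some who gain access and some who lose it.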

Once you have your testers set, give them all an attribute such as policy test: [policy in question] and use it to create a "subscription-testers" subscription policy. To give the testers access to the development tables/views, this policy should target only the tables/views in that domain, as described above.

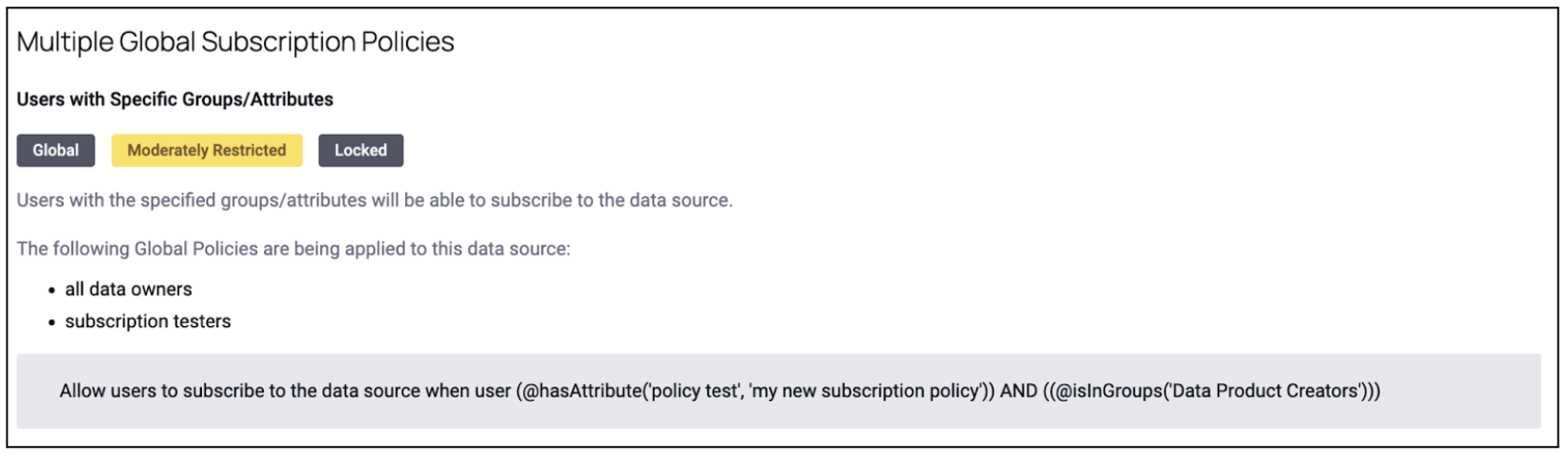

If you are testing a masking or row-level security policy, you don't need to do anything else; it's ready for your policy testers. However, if your ultimate goal is to test a subscription policy, you need to build it separately from the above "subscription-testers" subscription policy, and ensure that you select the Always Required option for the "subscription-testers" subscription policy. That way, when the "subscription-testers" subscription policy is merged with the real subscription policy you are testing, both policies are considered and some of your test users may correctly be blocked from the table (which is part of the testing). In that case, the two policies (the "subscription-testers" policy and the real policy being tested) are merged with an AND.

There's nothing stopping you from testing a set of changes (many subscription policies, many data policies) all together as well.

Once you’ve received feedback from your test users and are happy with the policy change, you can deploy the policy. For example, add prod-domain as a target, remove dev-domain, and then apply the policy. Alternatively, build the policy using a user that has manage policies permission in the production domain.

Testing an edit to an existing policy

The approach here is the same as above; however, before applying your new policy to the development domain, you should first locally disable the pre-existing global policy on the data source. To do so, open the development data source in the Immuta UI as the data owner and disable the policy you plan to change in the policy tab of the data source. This is necessary because the clone should have all the same tags as the production table, so the pre-existing policy will already be applied to it.

Now you can create the new version of the policy in its place without any conflict.

Policy approvals

Sometimes you may have a requirement that multiple users approve a policy before it can be enabled for everyone. If you are in this situation, you may want to first consider doing this in git (or any source control tool) using our policy-as-code approach, so all approvals can happen in git.