App Settings

Navigate to the App Settings Page

Click the App Settings icon in the navigation menu.

Click the link in the App Settings panel to navigate to that section.

Use Existing Identity Access Manager

See the identity manager pages for a tutorial to connect a Microsoft Entra ID, Okta, or OneLogin identity manager.

To configure Immuta to use any other existing IAM,

Click the Add IAM button.

Complete the Display Name field and select your IAM type from the Identity Provider Type dropdown: LDAP/Active Directory, SAML, or OpenID.

See the SAML protocol configuration guide.

Immuta Accounts

To set the default permissions granted to users when they log in to Immuta, click the Default Permissions dropdown menu, and then select permissions from this list.

Link External Catalogs

See the External Catalogs page.

Add a Workspace

Select Add Workspace.

Use the dropdown menu to select the Workspace Type and refer to the section below.

Databricks Spark

Databricks cluster configuration

Before creating a workspace, the cluster must send its configuration to Immuta. To do this, run a simple query on the cluster (e.g., show tables). Otherwise, users will receive an error message when they attempt to create a workspace.
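As a minimal sketch of that step, the query can be run from a notebook attached to the cluster. The function name below is hypothetical, and the sketch assumes the notebook runs on a cluster where the Immuta integration is already installed; in a Databricks notebook, `spark` is predefined.

```python
def send_cluster_configuration(spark):
    """Run a lightweight query so the cluster reports its configuration to Immuta."""
    # SHOW TABLES is cheap to run; the rows it returns are not needed afterwards.
    return spark.sql("SHOW TABLES").collect()


# In a Databricks notebook, `spark` already exists, so the call would be:
# send_cluster_configuration(spark)
```

Any simple query should serve the same purpose; SHOW TABLES is used here only because it is the example given above.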

Databricks API Token expiration

The Databricks API Token used for workspace access must be non-expiring. Using a token that expires risks losing access to projects that are created using that configuration.

Use the dropdown menu to select the Schema and refer to the corresponding tab below.

Required AWS S3 Permissions

When configuring a workspace using Databricks with S3, the permissions listed below must be applied to arn:aws:s3:::immuta-workspace-bucket/workspace/base/path/* and arn:aws:s3:::immuta-workspace-bucket/workspace/base/path. Note: Two of these values are found on the App Settings page: immuta-workspace-bucket is from the S3 Bucket field and workspace/base/path is from the Workspace Remote Path field.

s3:Get*

s3:Delete*

s3:Put*

s3:AbortMultipartUpload

Additionally, the permissions listed below must be applied to arn:aws:s3:::immuta-workspace-bucket. Note: One value is found on the App Settings page: immuta-workspace-bucket is from the S3 Bucket field.

s3:ListBucket

s3:ListBucketMultipartUploads

s3:GetBucketLocation
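The two permission sets above could be expressed as a single IAM policy document. The following is a sketch only: the function name is hypothetical, the bucket and path are the placeholder values used above, and how the policy is attached (role, user, or instance profile) depends on your AWS setup.

```python
import json


def build_workspace_policy(bucket: str, base_path: str) -> dict:
    """Build an IAM policy document granting the workspace permissions listed above."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    path_arn = f"{bucket_arn}/{base_path.strip('/')}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level permissions on the workspace path and everything under it.
                "Effect": "Allow",
                "Action": ["s3:Get*", "s3:Delete*", "s3:Put*", "s3:AbortMultipartUpload"],
                "Resource": [path_arn, f"{path_arn}/*"],
            },
            {
                # Bucket-level permissions on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:ListBucketMultipartUploads", "s3:GetBucketLocation"],
                "Resource": [bucket_arn],
            },
        ],
    }


policy = build_workspace_policy("immuta-workspace-bucket", "workspace/base/path")
print(json.dumps(policy, indent=2))
```

Replace the two arguments with the values from the S3 Bucket and Workspace Remote Path fields before using the output.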

Enter the Name.

Click Add Workspace.

Enter the Hostname.

Opt to enter the Workspace ID (required with Azure Databricks).

Enter the Databricks API Token.

Use the dropdown menu to select the AWS Region.

Enter the S3 Bucket.

Opt to enter the S3 Bucket Prefix.

Click Test Workspace Bucket.

Once the credentials are successfully tested, click Save.

Enter the Name.

Click Add Workspace.

Enter the Hostname, Workspace ID, Account Name, Databricks API Token, and Storage Container.

Enter the Workspace Base Directory.

Click Test Workspace Directory.

Once the credentials are successfully tested, click Save.

Enter the Name.

Click Add Workspace.

Enter the Hostname, Workspace ID, Account Name, and Databricks API Token.

Use the dropdown menu to select the Google Cloud Region.

Enter the GCS Bucket.

Opt to enter the GCS Object Prefix.

Click Test Workspace Directory.

Once the credentials are successfully tested, click Save.

Add An Integration

Select Add Integration.

Use the dropdown menu to select the Integration Type.

To enable Azure Synapse Analytics, see the Azure Synapse Analytics configuration page.

To enable Databricks Spark, see the Configure a Databricks Spark integration page.

To enable Databricks Unity Catalog, see the Getting started with the Databricks Unity Catalog integration page.

To enable Redshift, see the Redshift configuration page.

To enable Snowflake, see the Snowflake configuration page.

To enable Starburst, see the Starburst configuration page.

Global Integration Settings

Snowflake Audit Sync Schedule

Requirements: See the requirements for Snowflake audit on the Snowflake query audit logs page.

To configure the audit ingest frequency for Snowflake,

Click the App Settings icon in the navigation menu.

Navigate to the Global Integration Settings section and, within it, the Snowflake Audit Sync Schedule.

Enter an integer into the textbox. If you enter 12, the audit sync will happen once every 12 hours, so twice a day.
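To see how a given value translates into daily sync frequency, the arithmetic can be sketched as follows. The helper name is hypothetical, and rejecting non-positive values is an assumption of this sketch rather than documented behavior of the field.

```python
def syncs_per_day(interval_hours: int) -> float:
    """Return how many audit syncs occur in 24 hours for a given interval in hours."""
    if interval_hours <= 0:
        raise ValueError("interval must be a positive number of hours")
    return 24 / interval_hours


for hours in (1, 6, 12, 24):
    print(f"every {hours} h -> {syncs_per_day(hours):g} sync(s) per day")
```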

Databricks Unity Catalog Configuration

Audit

Requirements: See the requirements for Databricks Unity Catalog audit on the Databricks Unity Catalog query audit logs page.

To configure the audit ingest frequency for Databricks Unity Catalog,

Click the App Settings icon in the navigation menu.

Navigate to the Global Integration Settings section and, within it, the Databricks Unity Catalog Configuration.

Enter an integer into the textbox. If you enter 12, the audit sync will happen once every 12 hours, so twice a day.

Additional privileges required for access

By default, Immuta will revoke Immuta users' USE CATALOG and USE SCHEMA privileges in Unity Catalog for users who do not have access to any of the resources within that catalog or schema. This includes any USE CATALOG or USE SCHEMA privileges that were granted outside of Immuta.

To disable this setting,

Click the App Settings icon in the navigation menu.

Navigate to Global Integration Settings > Databricks Unity Catalog Configuration.

Click the Revoke additional privileges required for access checkbox to disable the setting.

Click Save.

See the Databricks Unity Catalog reference guide for details about this setting.

Manage Data Providers

You can enable or disable the types of data sources users can create in this section. Some of these types will require you to upload a driver before they can be enabled. The list of currently supported drivers is on the ODBC Drivers page.

To enable a data provider,

Click the menu button in the upper right corner of the provider icon you want to enable.

Select Enable from the dropdown.

If a driver needs to be uploaded,

Click the menu button in the upper right corner of the provider icon, and then select Upload Driver from the dropdown.

Click in the Add Files to Upload box and upload your file.

Click Close.

Click the menu button again, and then select Enable from the dropdown.

Enable Email

Application Admins can configure the SMTP server that Immuta will use to send emails to users. If this server is not configured, users will only be able to view notifications in the Immuta console.

To configure the SMTP server,

Complete the Host and Port fields for your SMTP server.

Enter the username and password Immuta will use to log in to the server in the User and Password fields, respectively.

Enter the email address that will send the emails in the From Email field.

Opt to enable TLS by selecting the Enable TLS checkbox, and then enter a test email address in the Test Email Address field.

Finally, click Send Test Email.
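If the test email fails, it may help to check the same values outside Immuta. The following is a minimal sketch using Python's standard smtplib; it assumes the server accepts STARTTLS on the given port, which may not match how Immuta negotiates TLS. The function names are hypothetical, and the host, credentials, and addresses in the usage comment are placeholders.

```python
import smtplib
from email.message import EmailMessage


def build_test_message(from_email: str, to_email: str) -> EmailMessage:
    """Build a short plain-text message for checking SMTP settings."""
    msg = EmailMessage()
    msg["From"] = from_email
    msg["To"] = to_email
    msg["Subject"] = "SMTP settings check"
    msg.set_content("If you received this, the SMTP host, port, and credentials work.")
    return msg


def send_test_email(host, port, user, password, from_email, to_email, use_tls=True):
    """Connect to the SMTP server, log in, and send one test message."""
    with smtplib.SMTP(host, port, timeout=10) as server:
        if use_tls:
            # Assumption: the server supports STARTTLS on this port.
            server.starttls()
        server.login(user, password)
        server.send_message(build_test_message(from_email, to_email))


# Example call with placeholder values:
# send_test_email("smtp.example.com", 587, "immuta", "secret",
#                 "immuta@example.com", "admin@example.com")
```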

Once SMTP is enabled in Immuta, any Immuta user can request access to notifications as emails, which will vary depending on the permissions that user has. For example, to receive email notifications about group membership changes, the receiving user will need the GOVERNANCE permission. Once a user requests access to receive emails, Immuta will compile notifications and distribute these compilations via email at 8-hour intervals.

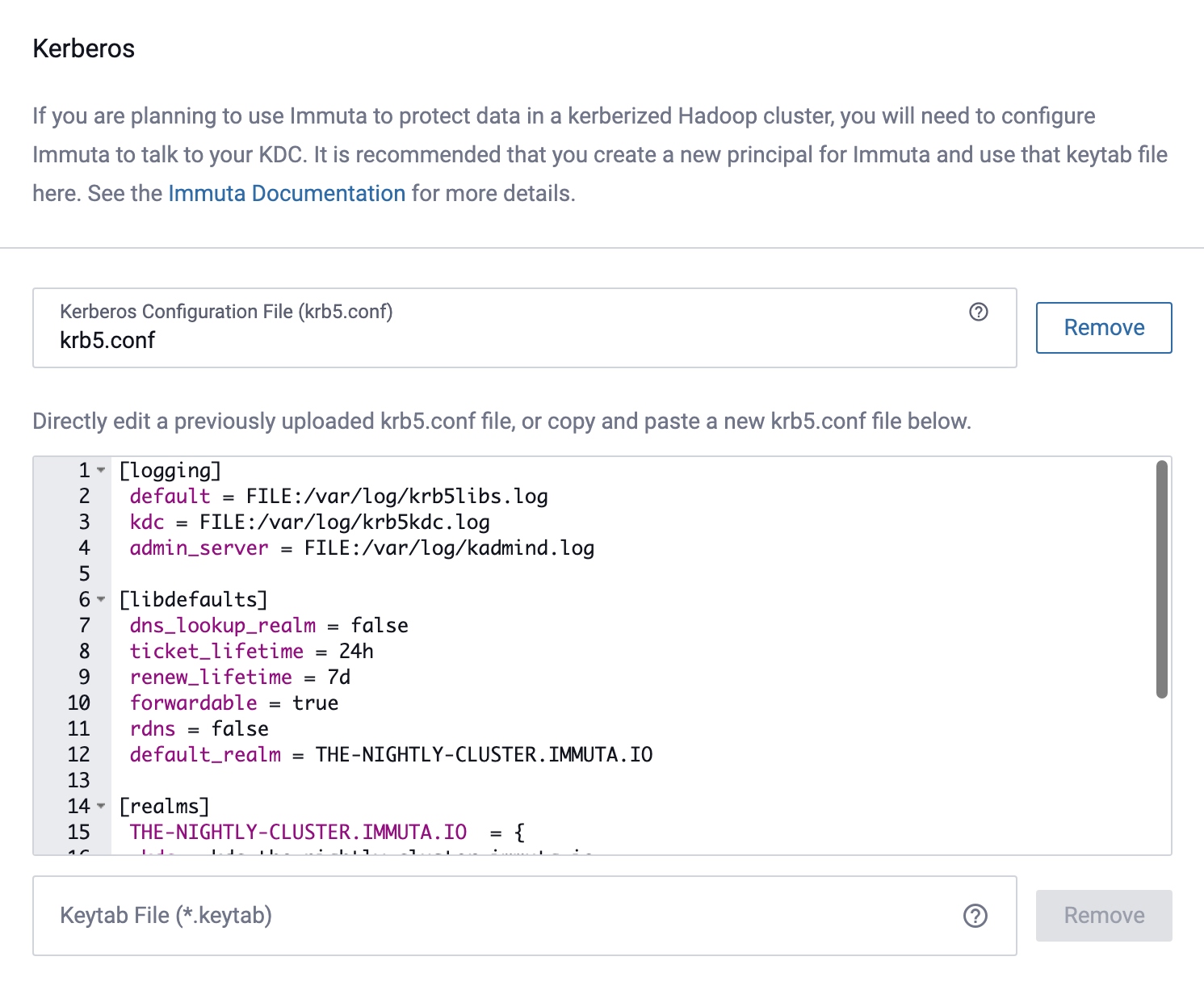

Initialize Kerberos

To configure Immuta to protect data in a kerberized Hadoop cluster,

Upload your Kerberos Configuration File, and then you can modify the Kerberos configuration in the window pictured below.

Upload your Keytab File.

Enter the principal Immuta will use to authenticate with your KDC in the Username field. Note: This must match a principal in the Keytab file.

Adjust how often (in milliseconds) Immuta needs to re-authenticate with the KDC in the Ticket Refresh Interval field.

Click Test Kerberos Initialization.

Generate System API Key

Click the Generate Key button.

Save this API key in a secure location.

Configure HDFS Cache Settings

To improve performance when using Immuta to secure Spark or HDFS access, a user's access level is cached momentarily. These cache settings are configurable, but setting the Time to Live (TTL) on any cache too low will negatively impact performance.

To configure cache settings, enter the time in milliseconds in each of the Cache TTL fields.
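Because the fields expect milliseconds, a small conversion helper can reduce unit mistakes. This is a sketch with a hypothetical function name, and the durations shown are illustrative, not recommended TTL values.

```python
from datetime import timedelta


def to_milliseconds(**duration) -> int:
    """Convert a duration given as timedelta keyword arguments to whole milliseconds."""
    # Dividing one timedelta by another with // yields an exact integer.
    return timedelta(**duration) // timedelta(milliseconds=1)


print(to_milliseconds(seconds=30))  # 30000
print(to_milliseconds(minutes=5))   # 300000
```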

Set Public URLs

You can set the URL users will use to access the Immuta application. Note: Proxy configuration must be handled outside Immuta.

Complete the Public Immuta URL field.

Click Save and confirm your changes.

Audit Settings

Enable Exclude Query Text

By default, query text is included in query audit events from Snowflake, Databricks, and Starburst (Trino).

When query text is excluded from audit events, Immuta will retain query event metadata such as the columns and tables accessed. However, the query text used to make the query will not be included in the event. This setting is a global control for all configured integrations.

To exclude query text from audit events,

Scroll to the Audit section.

Check the box to Exclude query text from audit events.

Click Save.

Configure Governor and Admin Settings

These options allow you to restrict the power individual users with the GOVERNANCE and USER_ADMIN permissions have in Immuta. Click the checkboxes to enable or disable these options.

Create Custom Permissions

You can create custom permissions that can then be assigned to users and leveraged when building subscription policies. Note: You cannot configure actions users can take within the console when creating a custom permission, nor can the actions associated with existing permissions in Immuta be altered.

To add a custom permission, click the Add Permission button, and then name the permission in the Enter Permission field.

Create Custom Data Source Access Requests

To create a custom questionnaire that all users must complete when requesting access to a data source, fill in the following fields:

Opt for the questionnaire to be required.

Key: Any unique value that identifies the question.

Header: The text that will display on reports.

Label: The text that will display in the questionnaire for the user. They will be prompted to type the answer in a text box.

Create Custom Login Message

To create a custom message for the login page of Immuta, enter text in the Enter Login Message box. Note: The message can be formatted in markdown.

Opt to adjust the Message Text Color and Message Background Color by clicking in these dropdown boxes.

Prevent Automatic Table Statistics

Without fingerprints, some policies will be unavailable.

These policies will be unavailable until a data owner manually generates a fingerprint for a Snowflake data source:

Masking with format preserving masking

Masking using randomized response

To disable the automatic collection of statistics with a particular tag,

Use the Select Tags dropdown to select the tag(s).

Click Save.

Randomized response

Support limitation: This policy is only supported in Snowflake integrations.

When a randomized response policy is applied to a data source, the columns targeted by the policy are queried under a fingerprinting process. To enforce the policy, Immuta generates and stores predicates and a list of allowed replacement values that may contain data that is subject to regulatory constraints (such as GDPR or HIPAA) in Immuta's metadata database.

The location of the metadata database depends on your deployment:

Self-managed Immuta deployment: The metadata database is located in the server where you have your external metadata database deployed.

SaaS Immuta deployment: The metadata database is located in the AWS global segment in which you have chosen to deploy Immuta.

To ensure this process does not violate your organization's data localization regulations, you need to first activate this masking policy type before you can use it in your Immuta tenant.

Click Other Settings in the left panel and scroll to the Randomized Response section.

Select the Allow users to create masking policies using Randomized Response checkbox to enable use of these policies for your organization.

Click Save and confirm your changes.

Advanced Settings

Preview Features

If you enable any Preview features, provide feedback on how you would like these features to evolve.

Complex Data Types

Click Advanced Settings in the left panel, and scroll to the Preview Features section.

Check the Allow Complex Data Types checkbox.

Click Save.

Advanced Configuration

Advanced configuration options provided by the Immuta Support team can be added in this section. The configuration must adhere to the YAML syntax.

Update the Time to Webhook Request Timeouts

Expand the Advanced Settings section and add the following text to the Advanced Configuration to specify the number of seconds before webhook requests time out. For example, use 30 for 30 seconds. Setting it to 0 will result in no timeout.

Click Save.

Update the Audit Ingestion Expiration

Expand the Advanced Settings section and add the following text to the Advanced Configuration to specify the number of minutes before an audit job expires. For example, use 300 for 300 minutes.

Click Save.
