Install a Trusted Library

IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS=maven:/my.group.id:my-package-id:1.2.3
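As a minimal sketch, the value above can be assembled from Maven coordinates with a small helper. It assumes the `maven:/<group id>:<artifact id>:<version>` pattern seen in the placeholder line. The function names `trusted_lib_uri` and `env_line` are hypothetical and are not part of any Immuta tooling.

```python
def trusted_lib_uri(group_id: str, artifact_id: str, version: str) -> str:
    """Format Maven coordinates as maven:/<group>:<artifact>:<version>.

    The pattern is an assumption inferred from the placeholder example.
    """
    for part in (group_id, artifact_id, version):
        # Reject empty parts and characters that would break the colon-separated form.
        if not part or ":" in part or any(c.isspace() for c in part):
            raise ValueError(f"invalid Maven coordinate part: {part!r}")
    return f"maven:/{group_id}:{artifact_id}:{version}"


def env_line(uri: str) -> str:
    """Render the environment variable assignment for a single trusted library URI."""
    return f"IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS={uri}"


if __name__ == "__main__":
    print(env_line(trusted_lib_uri("my.group.id", "my-package-id", "1.2.3")))
    # prints: IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS=maven:/my.group.id:my-package-id:1.2.3
```

Validating each coordinate before joining keeps a stray colon or space from silently producing a URI that points at the wrong artifact.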

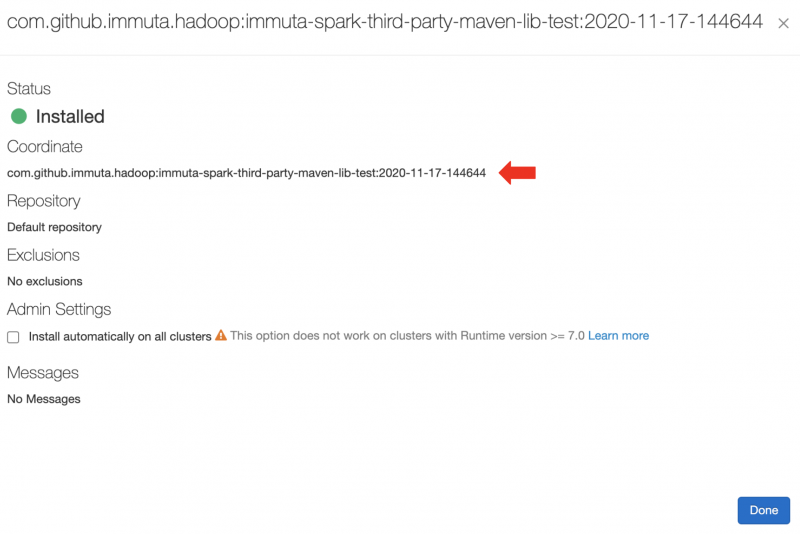

IMMUTA_SPARK_DATABRICKS_TRUSTED_LIB_URIS=maven:/com.github.immuta.hadoop.immuta-spark-third-party-maven-lib-test:2020-11-17-144644
